Dealing with high-dimensional data

Sketching & the JL-Lemma

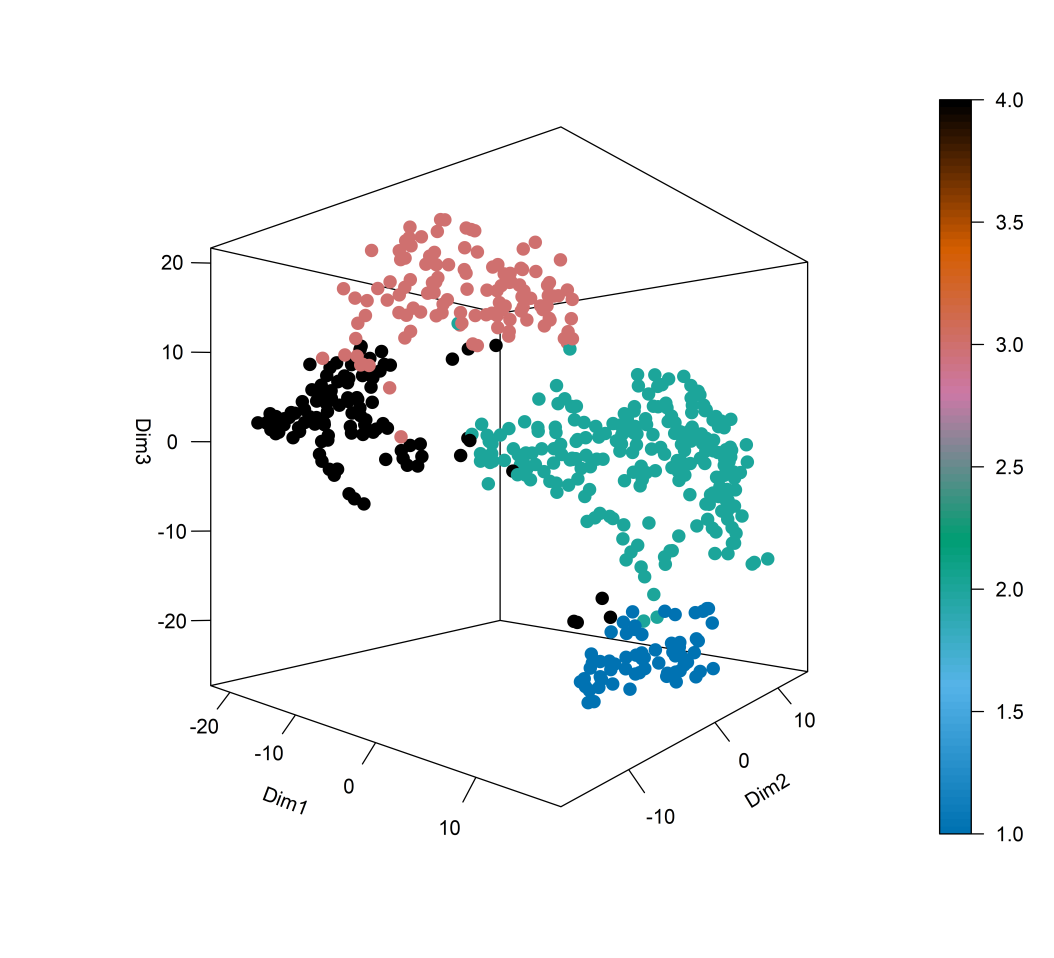

It’s extremely common in computer science to be dealing with very high-dimensional vectors. A natural question is can we sketch, or reduce the dimensionality, of these vectors while still maintaining the larger structure of the data. A common application of this is clustering, where we cluster datapoints based on their distances to other data. For this kind of application, we need to be able to maintain the pairwise distances between any two datapoints.

In particular, given vectors $v_1, \dots, v_n \in \mathbb{R}^d$, where the dimension $d$ is large, we want to find a function $f: \mathbb{R}^d \to \mathbb{R}^m$ where $m \ll d$ and pairwise distances are preserved. In other words, we want

Definition ($\epsilon$ Pairwise Distance Property) For all pairs $v_i, v_j \in (v_1, \dots, v_n)$ and some small $\epsilon$

which tells us that $f$ should be able to preserve the distance between any two vectors with greater and greater accuracy as our parameter $\epsilon$ approaches 0.

Theorem (Johnson-Lindenstrauss) For $m > O(\log(n)/\epsilon^2)$, there exists $f$ satisfying the equation above. This means that $n$ points in a high dimensional space can be mapped onto $m$ dimensions and still preserve pairwise distance. Actually, it turns out $f$ is a linear map and can be computed in a computationally efficient way.

Algorithm idea. Our first attempt at dimensionality reduction may involve trying to choose some subset of coordinates to include from each vector at random. However, this attempt fails because the accuracy depends a lot on the basis the vectors are represented in. The actual idea is as follows. We are going to generate a vector $g \in \mathbb{R}^d$ that points in a random direction and take the product $\langle v, g \rangle$. We do this $m = \mathcal{O}(\log(n))$ times, storing each result in a vector $\in \mathbb{R}^m$. The main intuition behind this is that $\langle v, g \rangle = |v||g|\cos(\theta)\propto |v|$, and that viewing the vector from many different directions gives you a lot of information about the vector.

The way we generate a vector in a random direction is by generating a vector where each individual entry is i.i.d from a Gaussian $\mathcal{N}(0, 1)$. We won’t prove why this works, but the intuition is that applying any orthogonal matrix (i.e. a distance-preserving linear mapping, where $U^TU = I$) to a Gaussian random vector leads to another random vector with the same distribution. Since rotation preserves distances, the distribution is invariant to rotation, so it must be that the Gaussian random vectors are uniformly distributed across all directions. Putting this all together, this gives rise to the following algorithm.

Algorithm. Build the matrix $A \in \mathbb{R}^{m \times d}$ where each row is a random Gaussian vector, which just means that each entry $A_{ij}\sim \mathcal{N}(0, 1)$, and then scale each entry by $\frac{1}{\sqrt{m}}$. Then compute $f(v) = Av$, which takes the product of all the scaled, random $d$-dimensional Gaussian row vectors.

Johnson-Lindenstrauss Property

Definition ($(\epsilon,\delta)$ JL Property) A distribution over matrices $A \in \mathbb{R}^{m\times d}$ satisfies this property if

whenever $m = \mathcal{O}((\log(1/\delta)\big/\epsilon^2)$, for any $v \in \mathbb{R}^d$, the inequality

holds with probability $1 - \delta$.

Remark Notice that if we can show that $A$ satisfies the JL property, then we will be able to prove the JL theorem. Consider picking one of the $\binom{n}{2}$ pairs of vectors $v_i, v_j$, and use that pair to form $v_i - v_j$. Then, we know $|A(v_i - v_j)| = |Av_i - Av_j| = |f(v_i) - f(v_j)|$. If $A$ satisfies the $(\epsilon, \delta)$ JL property, then this pair will preserve distance with probability $(1 - \delta)$, and will fail to preserve distance with probability $\delta$. If we let $F_i$ be an indicator r.v. denoting if our pair fails, then we fail any pair with probability

So choosing $\delta < \delta^*/\binom{n}{2}$, if $A$ satisfies the $(\epsilon, \delta)$ property, then we preserve all pairwise distances with probability $1-\delta^*$, as long as $m = \mathcal{O}((\log(n / \delta)\big/ \epsilon^2)$.

Remark We will show that our matrix $A$ satisfies the property, but it is not the only such matrix. For example, the Rademacher matrices, where each entry is independently chosen to be either $+1/\sqrt{m}$ or $-1/\sqrt{m}$ with equal probability satisfy the property. A random orthogonal matrix will also satisfy the property.

Theorem Our construction of $A$ satisfies the $(\epsilon,\delta)$ JL property

An important fact is the stability of Gaussian r.v.s. If we have two independent Gaussians and take a linear combination of them, $a_1\mathcal{N}(\mu_1, \sigma_1^2) + a_2\mathcal{N}(\mu_2, \sigma_2^2)$ will follow the distribution $\mathcal{N}(a_1\mu_1 + a_2\mu_2,\; a_1^2\sigma_1^2 + a_2^2\sigma_2^2)$. We can use this to show if we take the product of $v$ with some random Gaussian vector $g$, it's distributed as:

Algorithmic Speedup

The naive algorithm to compute all pairwise distances takes $\mathcal{O}((n^2d)$ time, to compute the distance in $\mathcal{O}((d)$ time for $\binom{n}{2}$ pairs. Since the matrix is dimension $\mathcal{O}((\log(n)) \times d$, it takes $\mathcal{O}((d\log(n))$ time to project a vector down, and we do this $n$ times. Once we have the lower dimensional vectors, however, we just need to compute the $\binom{n}{2} = \mathcal{O}((n^2)$ pairwise distances in $\mathcal{O}((\log(n))$ time each. So the algorithm runs in $\mathcal{O}((dn\log(n) + n^2\log(n))$ time, which is good if $d \gg \log(n)$. This says we can get a good approximation much quicker than brute-force!

Subspace Embedding

Let $U$ be a $d$-dimensional space. We’re interested in a $k$-dimensional subspace of this space. Given $v_1, v_2, \dots, v_k \in U$, let $S = \text{span}(v_1, v_2, \dots, v_k)$.

Theorem If we project each $v\in U$ to $Av$, then when $m > (k\log(1/\epsilon) + \log(1/\delta))\big/\epsilon^2$, we have for all $v \in S$

with probability $1-\delta$.

Proof Ideally, we want to apply the union bound over all vectors in the subspace. However, there’s infinitely many vectors, and we can’t do that… The main idea is to look at a nearby neighborhood of each vector, called the $\epsilon$-net.